Apple Siri conversations are being reviewed by external contractors, it has been confirmed. Worse still, the voice assistant is easily activated and sometimes picks up private conversations, such as people talking to their doctor, drug deals and sexual encounters, according to an article by the U.K.-based news outlet The Guardian.

01

Apple Siri: I’m listening

Devices such as the Apple Watch are often activated accidentally when a person lifts their wrist, and the assistant often "wakes" to the sound of a user's zipper, an Apple contractor told The Guardian. Apple does not explicitly state in its consumer-facing privacy documentation that humans are listening to conversations.

The whistleblower told The Guardian they had witnessed countless instances of recordings “featuring private discussions between doctors and patients, business deals, seemingly criminal dealings, sexual encounters and so on.”

Concerningly, the recordings are accompanied by user data showing “location, contact details, and app data.”

Apple has responded to the claims, saying that only 1% of recordings are used to improve Siri's responses to user requests and to measure when the device is activated accidentally. However, even 1% is not a small number: there are an estimated 500 million Siri-enabled devices in use.

Apple said it does not store the data with Apple IDs or names that could identify the user.

The firm told The Guardian: “A small portion of Siri requests are analyzed to improve Siri and dictation. User requests are not associated with the user’s Apple ID. Siri responses are analyzed in secure facilities and all reviewers are under the obligation to adhere to Apple’s strict confidentiality requirements.”

02

Apple Siri isn’t the only eavesdropping voice assistant

Siri is not the only assistant whose recordings of user requests are heard by human reviewers. In April, it was revealed that Amazon's voice assistant Alexa was sometimes recording private conversations. Then in July, it emerged that the Google Assistant was doing the same.

This raises the question of how secure smart speakers are: millions of people install these devices in their homes without adding any privacy controls. And the devices are data-hungry. For example, unless it is locked down, the Google Assistant has access to your search history.

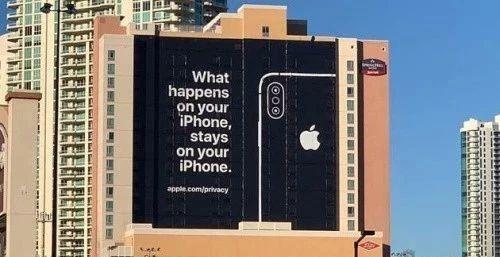

Until now, Apple had escaped the scrutiny faced by its rivals, perhaps partly because it promotes a strong privacy message.

It had a dig at its competitors earlier this year with a billboard advert which read: “What happens on your iPhone, stays on your iPhone.”

03

Apple Siri is listening: What to do

Unfortunately, Amazon Echo and Google Home devices are much easier to control than Siri. The best way to prevent Siri from listening to, and sometimes accidentally recording, conversations is to disable it entirely.

However, I also received a tip from Pablo Alejandro Fain, a Microsoft certified professional, via Twitter. “For those concerned about Siri’s random activations, you can simply turn off the ‘listen for Hey Siri’ feature without deactivating Siri completely,” he said.

Go to "Settings" on your device, open "Siri & Search" and turn off "Listen for 'Hey Siri'".

It's down to Apple to give people more clearly defined controls, and perhaps this latest news will compel it to do so. For now, it raises the age-old argument of security versus convenience: is Apple Siri really that useful to you?

Personally, I am very wary of voice assistants. The devices were not created with security in mind, and people often forget to lock them down. That is why, despite having secured it as much as possible, I threw my own Google Home in the bin.

SOURCE | Forbes