Less than a day after receiving widespread attention, the deepfake app that used AI to create fake nude photos of women is shutting down.

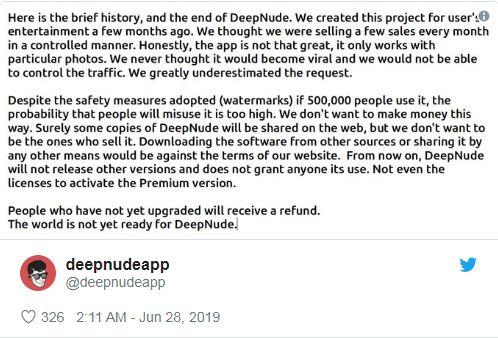

In a tweet, the team behind DeepNude said they “greatly underestimated” interest in the project and that “the probability that people will misuse it is too high.”

© Image | Twitter

DeepNude will no longer be offered for sale and further versions won’t be released.

The team also warned against sharing the software online, saying it would be against the app’s terms of service. They acknowledged, though, that “surely some copies” will get out.

Motherboard first drew attention to DeepNude yesterday afternoon.

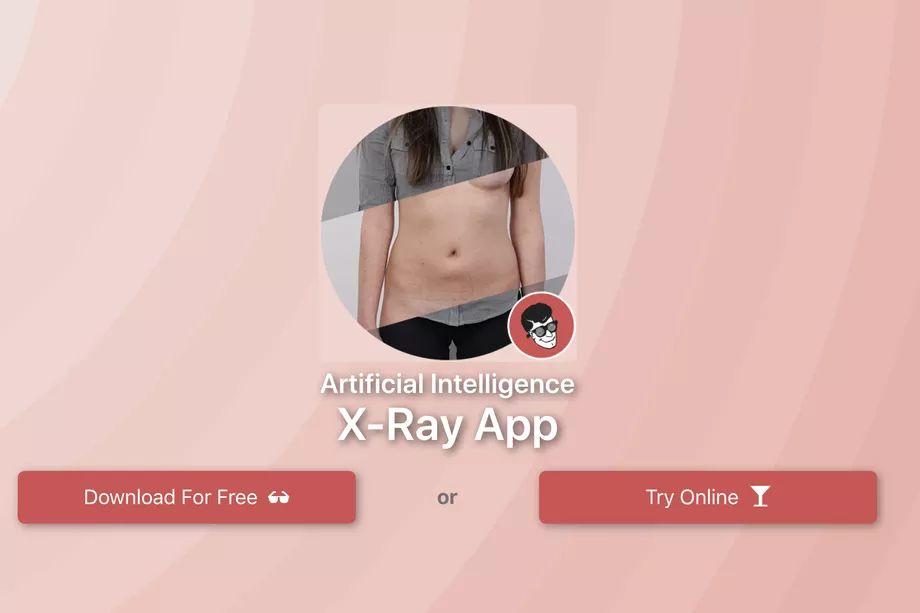

The app, available for Windows and Linux, used AI to alter photos so that a person appeared nude, and it was designed to work only on women.

© Image | Google

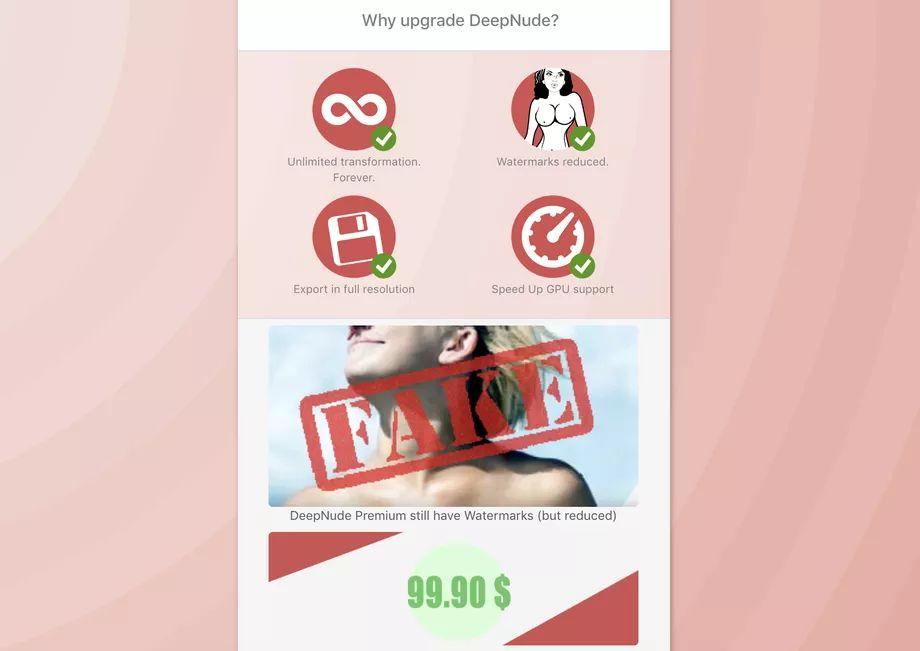

A free version of the app would place a large watermark across the images noting that they were fake, while a paid version placed a smaller watermark in a corner, which Motherboard said could easily be removed or cropped out.

The app has been on sale for a few months, and the DeepNude team says that “honestly, the app is not that great” at what it does.

But it still worked well enough to draw widespread concern about its use. While people have long been able to digitally manipulate photos, DeepNude made that ability instantaneous and available to anyone.

Those photos could then be used to harass women: deepfake software has already been used to edit women into porn videos without their consent, with little they can do afterward to protect themselves as those videos are spread around.

The creator of the app, who goes only by Alberto, told The Verge earlier today that he believed someone else would soon make an app like DeepNude if he didn’t do it first.

“The technology is ready (within everyone’s reach),” he said. Alberto said that the DeepNude team “will quit it for sure” if they see the app being misused.